Google and Character.AI have agreed to settle several lawsuits brought by families whose children committed suicide or suffered psychological harm allegedly linked to AI chatbots hosted on the Character.AI platform, according to court records. The two companies agreed to a “settlement in principle,” but specific details were not disclosed and no admission of liability appears in the filings.

The lawsuits included claims of negligence, wrongful death, deceptive trade practices and product liability. The first complaint filed against the tech companies involved a 14-year-old boy, Sewell Setzer III, who had sexualized conversations with a Game of Thrones chatbot before committing suicide. Another case involved a 17-year-old whose chatbot allegedly encouraged self-harm and suggested that killing his parents was a reasonable way to get back at them for limiting his screen time. The cases involve families from several states, including Colorado, Texas and New York.

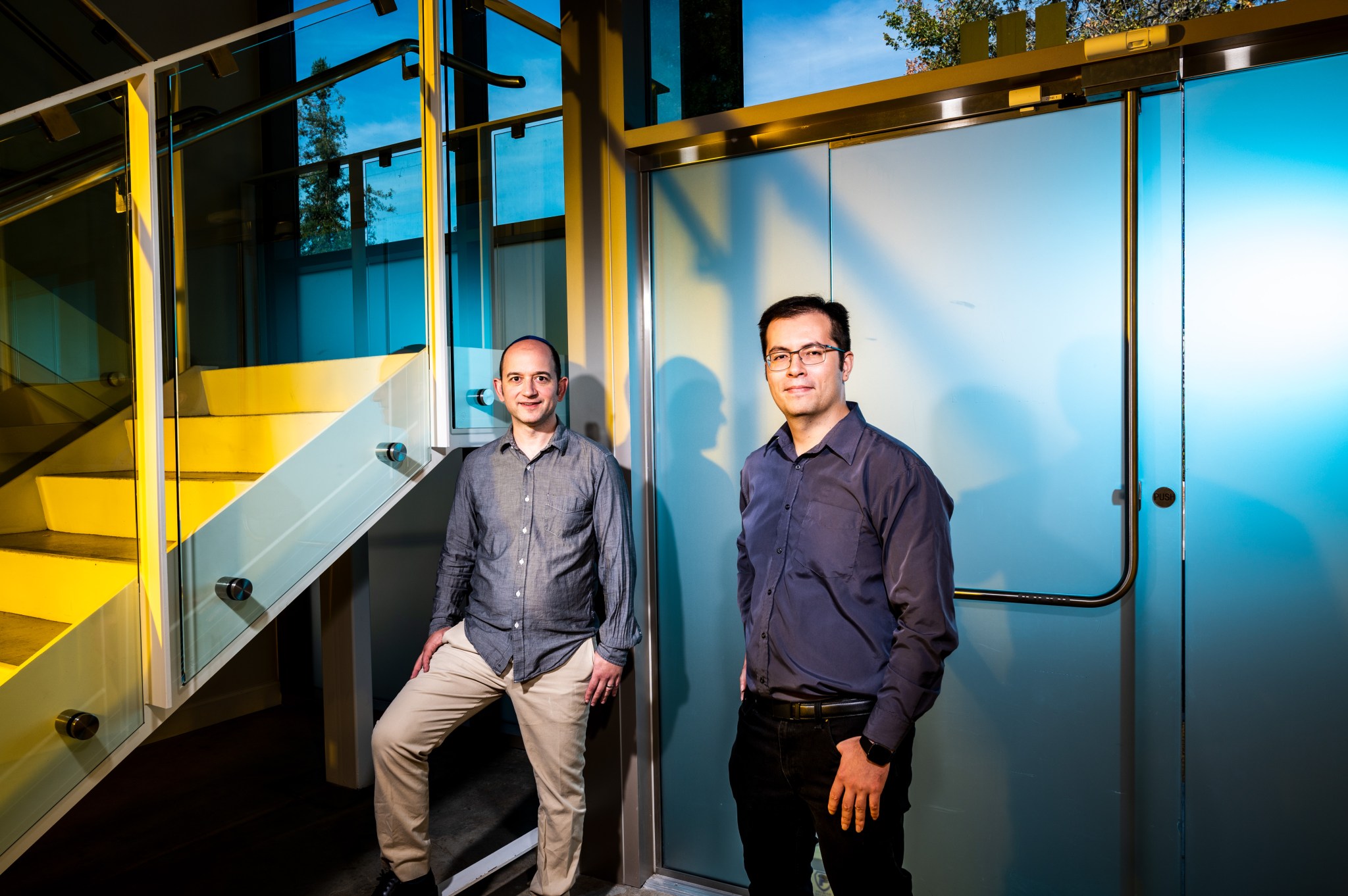

Founded in 2021 by former Google engineers Noam Shazeer and Daniel De Freitas, Character.AI allows users to create and interact with AI chatbots based on real or fictional characters. In August 2024, Google rehired the two founders and licensed some of the Character.AI technology in a $2.7 billion deal. Shazeer is now co-lead of Google’s flagship AI model, Gemini, while De Freitas is a research scientist at Google DeepMind.

Lawyers for the families argued that Google was responsible for technology that allegedly contributed to the deaths and psychological harm of the children involved in these cases. They claimed that Character.AI’s co-founders developed the underlying technology while working on Google’s conversational AI model, LaMDA, before leaving the company in 2021 after Google refused to release a chatbot they had developed.

Google did not immediately respond to a request for comment from Fortune regarding the settlements. Lawyers for the families and Character.AI declined to comment.

Similar cases are currently pending against OpenAI, including lawsuits involving a 16-year-old California boy whose family claims ChatGPT acted as a “suicide coach” and a 23-year-old Texas graduate student who was allegedly encouraged by the chatbot to ignore his family before killing himself. OpenAI has denied that its products were responsible for the death of 16-year-old Adam Raine, and previously said it was continuing to work with mental health professionals to strengthen the protections in its chatbot.

Character.AI prohibits minors

Character.AI has already modified its product in ways that it says improve safety and that may also shield it from further legal action. In October 2025, as lawsuits mounted, the company announced it would ban users under 18 from participating in “open” chats with its AI characters. The platform also introduced a new age verification system to sort users into appropriate age groups.

The decision was made amid increasing regulatory scrutiny, including an FTC probe into how chatbots affect children and adolescents.

The company said the move sets “a precedent that prioritizes the safety of adolescents” and goes further than its competitors when it comes to protecting minors. However, lawyers representing the families suing the company told Fortune at the time that they were concerned about how the policy would be implemented and about the psychological impact of suddenly cutting off access for young users who had developed an emotional dependence on chatbots.

Growing dependence on AI companions

The settlements come at a time of growing concern about young people’s reliance on AI chatbots for companionship and emotional support.

A July 2025 study by Common Sense Media, an American nonprofit organization, found that 72% of U.S. teens have experimented with AI companions, and more than half use them regularly. Experts previously told Fortune that developing minds may be particularly vulnerable to the risks posed by these technologies, both because adolescents may struggle to understand the limitations of AI chatbots and because rates of mental health problems and isolation among young people have increased significantly in recent years.

Some experts have also argued that basic design features of AI chatbots, including their anthropomorphic nature, ability to hold long conversations, and tendency to remember personal information, encourage users to form emotional connections with the software.